Heh, I only just realized that the Warcraft III Battle Chest I picked up a while back has the Mac versions on the same CDs. Looks like I’ll have more than the default Tony Hawk 4 to play with on trips after all…

Category: Geek

Round One, Fight!

Out of my own sheer boredomcuriousity, I wondered how the different architectures in the systems I have stand up to each other, so I devised some simple, completely unscientific benchmarks.

Continue reading “Round One, Fight!”

Light Pollution

My living room at midnight:

Pity the poor guest who gets stuck sleeping out there…

(Though some are dim and close, there are 17 LEDs in that picture, with others possible that just weren’t on at the time.)

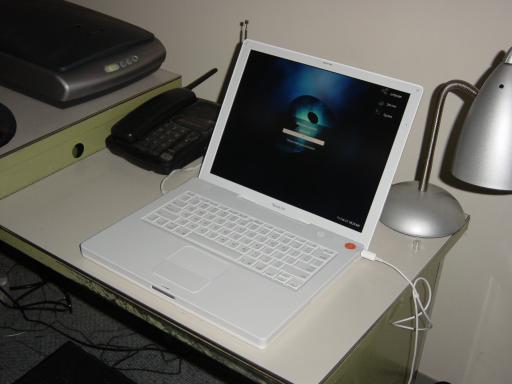

Say Hello To My Little Friend

The newest member of my little family, ‘ebotona’:

It’s an iBook G4, and although I’ve occasionally used Macs before, this is the first one I’ve actually ever owned. Why an iBook? Well, I always like to have something new to tinker with. My knowledge about Macs is about 10 years out of date, so it couldn’t hurt to get back up to speed on them, especially since I may get nailed with doing our OSX client port at work. I don’t really need a new full-blown desktop system, but I could occasionally use a laptop, so the iBook fills both needs; it’ll fulfill my travelling requirements, and still give me something new to fiddle with when at home.

So what was the first thing I did? Wipe OSX off and install Linux, of course… :-)

Continue reading “Say Hello To My Little Friend”

RFC on WTF RSS IGF

I recently tried out an RSS aggregator extension for my browser, and I have to say, I’m not really impressed so far. It *works*, but I find the following a bit annoying:

1) Inconsistent context. Some sites put the whole damn article in the RSS entries, some only put the first ‘x’ number of words.

2) Lack of comment information. Often I’m interested in seeing any new comments on older entries, but the RSS file doesn’t even tell me how many comments there are so I can’t tell if there are any new ones

3) Inconsistent HTML usage. Some sites put HTML within the RSS entry, but some don’t, which is important on sites where things like accompanying images are important to the entry.

As a result I usually wind up visiting the site manually anyway to get the proper layout, images, comments, etc., so checking in the RSS aggregator first didn’t really do any good.

I can see one area in which RSS would be quite useful, when you want to check large numbers of infrequently-updated sites for new entries without having to visit every single one. It just doesn’t do much to help me out. It sounds great in theory, so maybe it’s just the implementations and usage of it that’s not up to snuff yet.

Schadenfreude

I’m sure by now everyone and their dog has heard about the Windows NT/2K source code being leaked, and I have to say, I feel a bit sorry for them.

Now before you bite my head off, no, I’m not going soft on them. Windows still sucks technically, their business practices are still shady, and I still try to avoid buying from them when I can. It’s still their code though, and it’s their right to control it. By application of The Golden Rule, I can’t condone something happening to them that I wouldn’t want to happen to myself. If our company code ever got leaked, I’d probably crawl under a rock and die of embarrassment at having all of my coding sins exposed to the world.

Now that it’s out though, will I look at it? Hell no, that code is poison to any professional programmer. The last thing I need is Microsoft out to ruin my professional career just because there’s even the slightest suspicion I may have glanced at it, or to have any future contributions to open source projects subject to suspicion of being tainted knowledge. Just look at what SCO’s up to to see where that leads.

The Dark Side Of The Net

You learn an awful lot when you run your own site, and it’s mostly driven by what people try to do to you. This week’s lesson was about ‘dark crawlers,’ web spiders that don’t play by the rules.

Any program that crawls the web for information (mostly search engines), is supposed to follow the robots exclusion standard kept in the robots.txt file. Not every part of a web site is suitable for crawling — for example, the /cgi-bin/mt-comments.cgi… links on my site would just contain redundant information already in the main articles and would place extra load on the server — so you use that file to tell crawlers which parts of your site not to bother loading. You can also use it to stop crawlers that are behaving in ways you don’t like (e.g., polling too often) based on a unique part of their user agent name.

Adherence to that standard is entirely voluntary though, and some crawlers ignore it entirely. Yesterday I noticed a large number of hits coming from two class-C subnets. Although they appeared to be regular web browsers by their user agents (one Mac, one Windows), they were very rapidly working their way through every article on the site, in numerical order. A Google check on the IP address range quickly revealed that they were part of a company called ‘Web Content International’ which is apparently notorious for this kind of dark crawling.

A couple of new firewall rules to drop packets from them stopped their crawling, but then my kernel logs were getting flooded with firewall intrusion notices. They were apparently content to sit there retrying every few seconds for however long it took to get back in. Allowing them back in and having Apache return a 403 Forbidden page to requests from their address ranges instead seemed to finally make them stop.

Is that kind of crawling really that bad? Crawling is a natural web activity now, the site is public information, and these guys probably don’t intend any harm, so it doesn’t seem *too* bad. There are worse offenders out there too, such as dark crawlers that specifically go into areas marked as ‘Disallowed’ in robots.txt even if they weren’t part of the original crawl, hoping to find juicy details like e-mail addresses to spam. Still, if they want to crawl they should be open about admitting they’re doing so by following the robot standards. If they’re going to be sneaky about getting my data, I’m perfectly within my rights in being sneaky in denying it to them.

Nibbles-n-bits-n-bits-n-bits-n-bits-n-…

Okay, having addressed 64-bit hardware the question remains, who cares? What does it actually mean to you?

Well, nothing really. For all the end-user knows the system could be 8-bit or 500-bit or whatever internally, and it doesn’t matter. Linux or Windows or whatever you use will still look, feel, and run the same way. The only people it really makes a big difference to are the programmers, but in a roundabout way it does eventually wind up affecting the end-user.

Continue reading “Nibbles-n-bits-n-bits-n-bits-n-bits-n-…”

Smaller Is Better?

Yeesh, I’m falling behind on all the tech news. I only just found out about the BTX form factor which is supposed to be available fairly soon.

It sounds great on paper, allowing smaller cases, better cooling of components, more silent operation, etc., but already my hands are cringing in fear. Why? Because every time I open one of my cases to work on the internals, I wind up getting cuts on my hands from *somewhere*. I’m still not entirely sure where and I may not even notice until hours later, but something inside those cases is trying to murder me. Or cut my fingers off, at least. Smaller cases are just going to make it even worse!

I don’t think this is what they meant by progress sometimes requiring the sacrifice of blood…

Is This Irony Or Silicony?

AMD has actually been around for quite a while, but it’s their line of Intel-compatible processors that they’re now most famous for. Now however, Intel will be making AMD-compatible processors…

What happened? Basically, Intel lost the 64-bit race.

Continue reading “Is This Irony Or Silicony?”

Vidiot

All I wanted to do was convert some clips… I’ve collected a number of little bits of video over the years, but they’re sometimes a bit of a pain to deal with. They’re in a zillion different formats, have to be played back on the computer, are often too small on the monitor and don’t look good when blown up to full screen, and need something a bit faster than my 400 MHz Linux box anyway. (The other, faster one’s often in XP for games.)

Fortunately there’s an easy solution: Video CDs! Simply convert everything to MPEG files, burn them to CD, and I can play them on my DVD player instead.

It turns out that video is a bit more complex than I had anticipated…

Continue reading “Vidiot”

Watch Those Wildcards

A RAID array may save your data from drive failure, but it won’t protect it from brain farts.

heide:/media/video/anime$ mkdir tmp ; cd tmp

heide:/media/video/anime/tmp$ cp ../bagi_part*

heide:/media/video/anime/tmp$ ls

heide:/media/video/anime/tmp$Wait, what did I just do…

I just copied the part 1 file over top of the part 2 file, that’s what… Gotta be careful with those wildcard expansions, especially when ‘cp’ and ‘mv’ are involved. (In this case I forgot the ‘.’ parameter at the end to tell it to copy the files here instead of over each other.)

Fortunately I hadn’t wiped the Linux partition on the old system yet and could recopy it back from there. Laziness pays off sometimes…

How Do I Hate Thee? Let Me Count The Ways…

Lotus Notes must die. It was pushed onto us when we got bought out by our parent company and we had to integrate with their e-mail system, and it’s come to be universally loathed around the office. Outlook/Exchange had their own fair share of problems, but at least they were well-known and livable.

Continue reading “How Do I Hate Thee? Let Me Count The Ways…”

Biting the Apple

Looking around at a lot of people I know, it seems like I’m the only one *not* using or at least praising the Mac. Why haven’t I taken the plunge yet? Well, it’s not any “OS A sucks, B rulez!!1!” mentality; OSX sounds just great from most reports so far.

I already have two working systems, a full-time Linux server for ‘work’ and a dual boot XP/Linux system for gaming and other CPU-intense tasks, and between them I have pretty much all of my needs covered. Whenever I upgrade it’s almost always to get better gaming performance, not for experimental systems. I don’t really have the room for a new system and I’m not about to *replace* a whole system with a relatively unknown (to me) one. I just don’t have any big incentive to switch or add in a Mac yet.

Except maybe in one spot… The one thing I’m still missing is a laptop. It’s always been a fairly low priority item for me since I don’t travel much, but there’s the odd time it might be useful. Since the tasks it would need to be able to handle would be pretty basic (store files, access USB devices, basic net connectivity), a Mac would cover those basic tasks pretty well while still letting me have one to tinker with.

Will I? Eh, depends on how lazy I’m feeling when/if I ever get around to it. :-P It probably wouldn’t even be a top-end model; something like the 14″ iBook would likely suffice. I’m certainly not about to shell out $4300 for this one…

Murphy Is Laughing At Me

Yeesh. My server had been up for almost a full month since the last reboot, but I go away for a week and what happens? A mere eight hours after leaving the house, one of the hard drives flakes out and leaves the system hung for the rest of the week…

Now I Just Need A Virus Ninja

The War on Spam is getting to the point where you need better automated tools; having to manually adjust procmail filters for every new trick was quickly becoming annoying.

So, I finally decided to give SpamAssassin a whirl. It’s always been highly recommended but I’d been a bit hesitant to use it since it looked like it might be overkill. It’s the kind of thing you install to handle spam across entire corporate networks (we use it at work here too), so I was expecting something sendmail-like in its difficulty to configure and admin. It turned out to be pretty painless, though — do the ‘make install’, set up the .forward and .procmailrc files (the samples included work just fine), and ta-da, you’re done and your e-mail is now being spam-filtered.

The important question is, of course: does it work? The answer is yes…and no. So far it has caught a good number of spam messages and not accidentally flagged any valid e-mail as false positives. There are however still a few types of messages making it through the filter:

1) Viruses. Unfortunately Swen and its ilk are still circulating around the net far too much, and with little text in the message to parse and none of the spammer’s tricks being used, it’s hard for SpamAssassin to catch these. Technically it’s not really SA’s job to catch viruses; I’ll have to find another package and use it to do additional virus filtering instead.

2) Short, generic spam. These are those messages with vague subjects like “hi”, “lose it”, “can u spend few mins?” etc. and worded like a friendly greeting. Since most of the usual spammer’s tricks are missing, SA can’t judge these very well.

However, there is hope for the latter case: SA uses ‘Bayesian filtering’ to attempt to learn what spam looks like. If I keep feeding messages like those to the database, it should eventually start to be able to tell the difference between them and valid e-mail and start filtering them automatically. In theory, anyway. Only time will tell how well it will work, as it has to build up a history first.

In the meantime I still have to handle those viruses and some spam messages manually. It’s still better than no filtering at all though, and using the SA tools to teach it about the spam it misses isn’t too much of a hassle.

You Don’t Get What You Don’t Pay For

I finally got around to buying a proper set of headphones for my portable music. Unfortunately, I was focusing primarily on making sure that they were light and unobtrusive — I have a fantastic quality set of headphones at home already, but they’re big and bulky and shut out nearly all other sound, which doesn’t make them very practical for day-to-day casual use. That led me to a set of Sony headphones that only cost around $16, but they seemed to be just what I was looking for.

Of course, they turned out to be utter crap. Too much of the sound leaks away, especially high frequencies, the headband is some cheap plastic that I swear is going to break within a month, and the jack is ultra-quirky. The slightest shift causes crackling, volume fading, and the right channel vanishing entirely.

I’m probably going to have to go down to somewhere like A&B Sound to find a *true* decent set of headphones that won’t break easily, sound good, and work reliably, and it’ll probably cost an arm and a leg. Of course, there are limits…

Why Windows CE Sucks, Part 137

I was getting a bit tired of the same old playlist on my PocketPC, so I took over 400 of my favourite MP3s, transcoded them to ~96kbps Ogg Vorbis format, and wrote a script to randomly pick out around 256 megs worth of them each time it was run, to build new playlists that would fit in the memory card. The hardest part of all that was not the transcoding, or writing the script; it was just getting the damn files transferred to the PocketPC.

About the only way to transfer files to it is to open it up under Explorer, and drag and drop files into the folder you want, and the ActiveSync program takes care of copying them over. However, attempting to do so would invariably halt and cause the USB connection to break after three or four files were copied. I’d have to resync, delete the partially copied file, and restart the copy where it left off. That would be merely annoying except that after a while it wouldn’t just break the connection, but it would also corrupt the memory card.

Now I had to reformat the memory card, but guess what, Windows CE doesn’t include any way to format cards. I had to go looking for utilities on the net, and most of the ones I ran across were commercial bundles of utilities, and I wasn’t about to shell out money just to format this stupid card. I finally found a free set of tools to do the formatting, and could use the card again.

I also finally found out that other people were having the same problem, and that there was a patch available for ActiveSync that fixes problems with large file transfers, and applied it. Now the files are transferring properly and there haven’t been any breaks or corruption yet, but it’s going very slowly at around 100K/s. Even with an old USB 1.0 connection it should be ten times faster…

Though in the end it’s now working, it’s frustrating that it had to be so much trouble in the first place. Devices like PDAs are supposed to just work right out of the box, but they couldn’t even get something as basic as transferring files working properly…

What The Hell Is J0684IDX.AUT For?

It’s amazing how much crap accumulates on our computers. While working on something, I hit an unexpected ‘out of disk space’ error, so I started poking around trying to find stuff to get rid of so I could free up some space. It didn’t take long, and I figured I may as well spend a bit of time tidying up my whole system.

After I was finished I had deleted over 19 gigs of files. This system only has a 40 gig drive to begin with. Multi-megabyte trace files from bugs long since fixed, database snapshots from customers from years ago, source code from previous versions that’s no longer needed, online manuals I’d saved to disk and only used briefly, ten-line test programs written to quickly check something, software installed and used once and then forgotten, temp files from programs that failed to clean up properly, and all sorts of other junk was hiding about in the nooks and crannies of the filesystem.

It’s especially fun when you find files with no immediately obvious use, in a vaguely-named folder, with no documentation. Anything I couldn’t figure out within 30 seconds got thrown in the recycle bin.

Of course it’ll probably only take me a couple more months to fill the drive back up with junk again…

Hey, Nice Beaver!

The latest 2.6.0-test10 Linux kernel has been released and is expected to be the last in the test series before an official release, and in keeping with old traditions like the ‘greased weasel’ series, Linus has christened this one with the name ‘stoned beaver.’

(*eyes Linus suspiciously*)